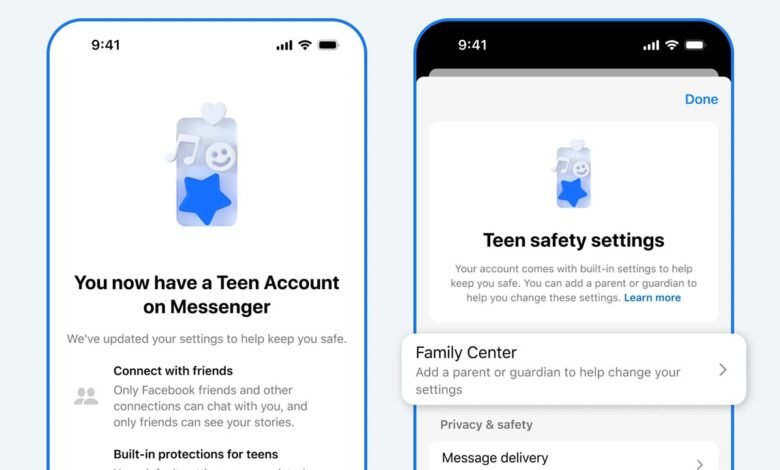

With the Communications Authority of Kenya (CA) moving toward enforcing child online safety rules by October 2025, the world’s biggest tech companies are already making visible shifts. Meta, the parent company of Instagram, Facebook and Messenger, has now expanded its Teen Accounts protections beyond Instagram and rolled them out globally across Facebook and Messenger.

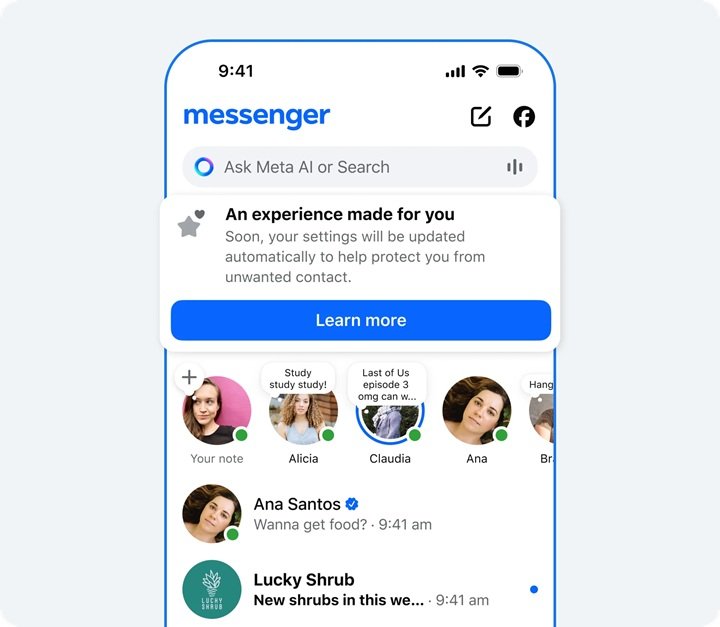

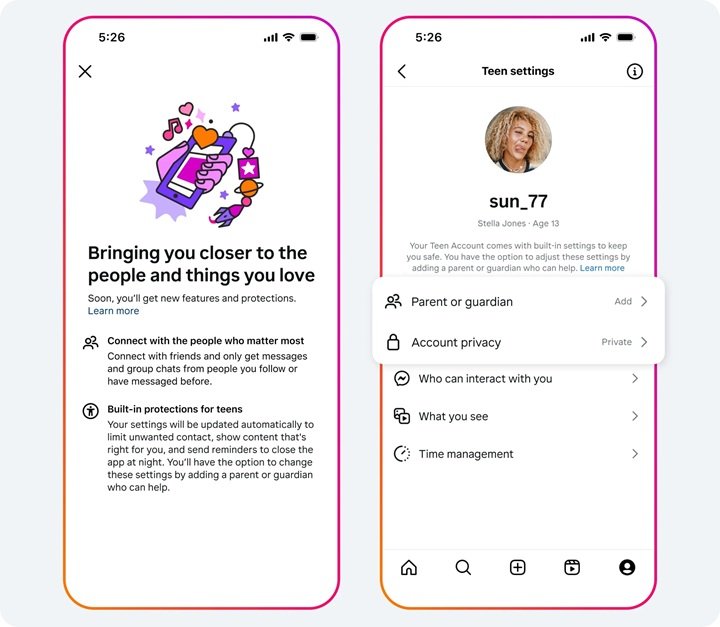

Teen Accounts were first introduced a year ago on Instagram as part of a broader effort to make online experiences safer for young users. Meta says it has already placed hundreds of millions of teens under these protections on Instagram, and the same framework is now being extended to all teens using Facebook and Messenger worldwide. The protections tighten defaults around privacy and safety by limiting who can contact teens, restricting exposure to sensitive content, and curbing potentially harmful interactions.

According to Instagram head Adam Mosseri, the company wants parents to “feel good” about their teens using social media platforms. He noted that while teens use apps like Instagram to connect with friends and explore their interests, those experiences shouldn’t come at the cost of safety. Over the past year, Meta has added more controls, such as restrictions on going Live, stronger age-verification measures and stricter messaging limits for teens.

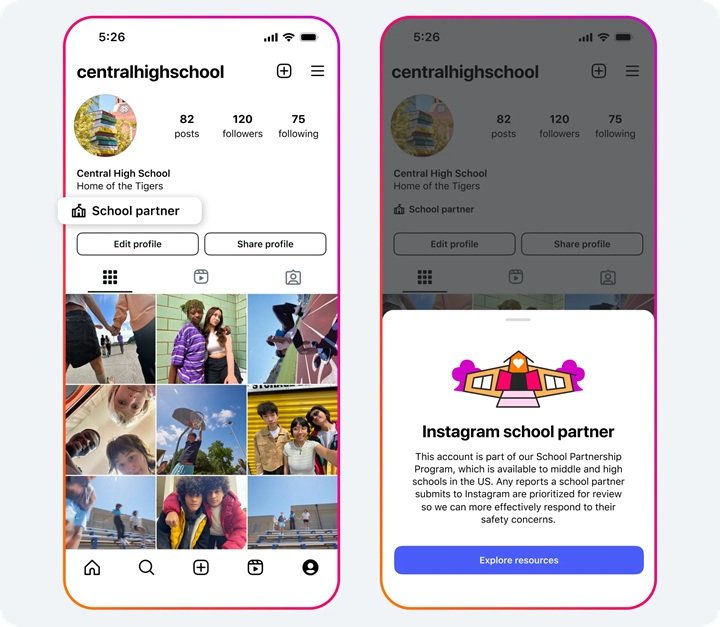

But the company isn’t stopping at in-app protections. Recognizing that schools and educators play a major role in reporting harmful online behaviour, Meta has launched a School Partnership Program. The initiative, currently available to middle and high schools in the United States, allows educators to report problematic content such as bullying directly to Meta for fast-tracked review. Meta says it aims to review such reports within 48 hours.

Schools that sign up gain priority reporting channels, access to social media safety resources and a visible badge on their Instagram profiles to signal official partnership. Early pilots reportedly received positive feedback from administrators who said the program helped them act quickly on harmful content involving students.

To complement enforcement efforts, Meta is also scaling up digital literacy initiatives. It has partnered with the child safety organization Childhelp to roll out an online safety curriculum tailored for middle school learners. The curriculum, which is fully funded by Meta and offered at no cost, includes scripted lessons, facilitator guides, interactive activities and videos that teach children how to spot online exploitation, scams and inappropriate behaviour. The company expects to reach one million students soon, partly through a peer-learning model in which trained high school students teach younger learners.

These developments come at a time when regulators, including the Communications Authority of Kenya, are widening the scope of responsibility around child safety online. Kenya’s upcoming guidelines don’t just target global platforms like Facebook or Instagram but also pull in telcos, ISPs, hardware makers, app developers, EdTech platforms and broadcasters. The expectation is that safety features are built into platforms by design, not offered as optional extras.

By extending its Teen Accounts protections from Instagram to Facebook and Messenger and engaging schools and educators, Meta appears to be positioning itself ahead of regulatory pressure. And as Kenya’s enforcement deadline draws nearer, other tech players, both local and international, will likely face similar expectations to redesign their platforms with child safety built in from the start.